Improving Reporting of Sexual Harassment on Social Media: How Human-Like Features Can Make Users Feel Heard and Supported

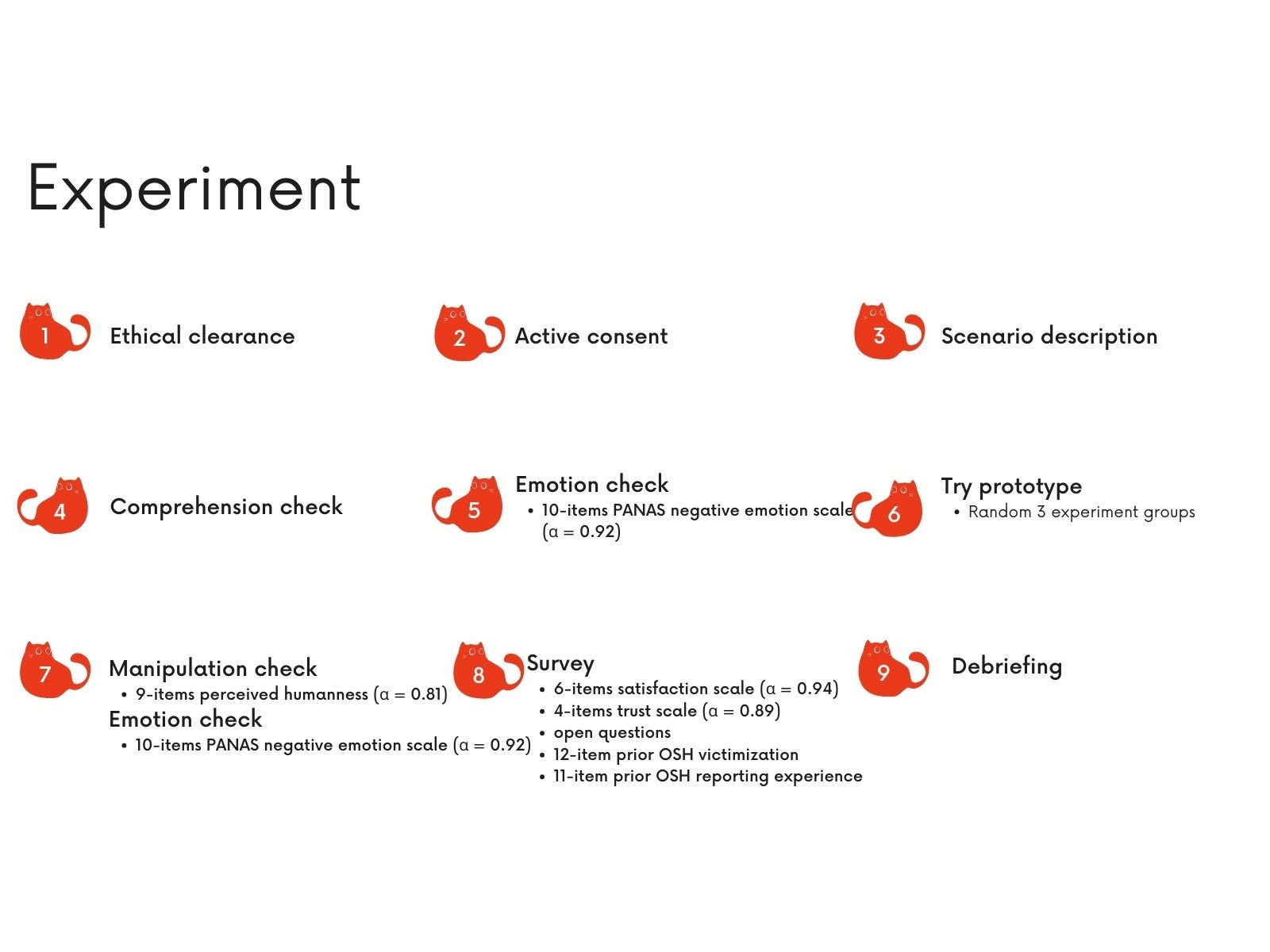

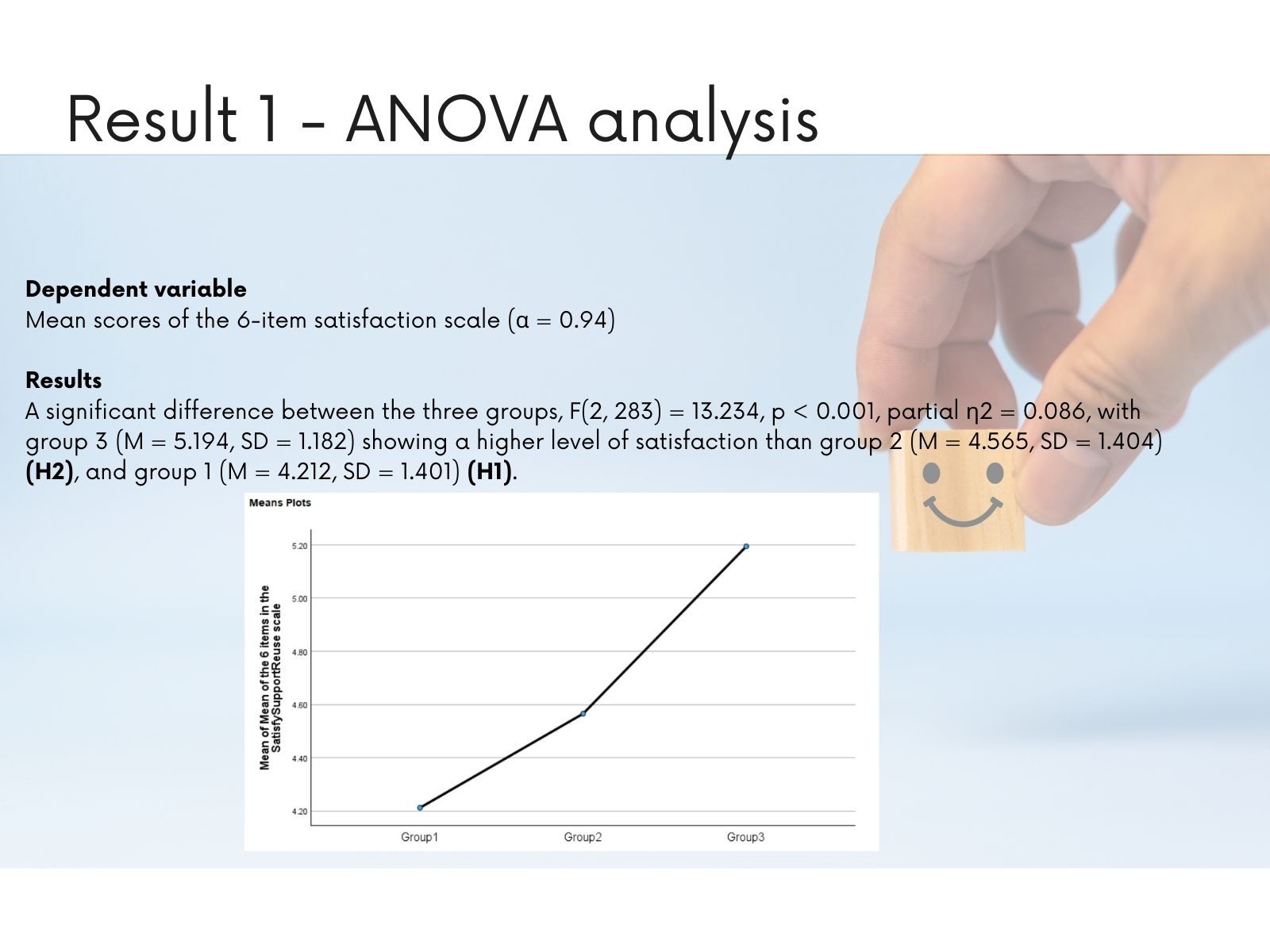

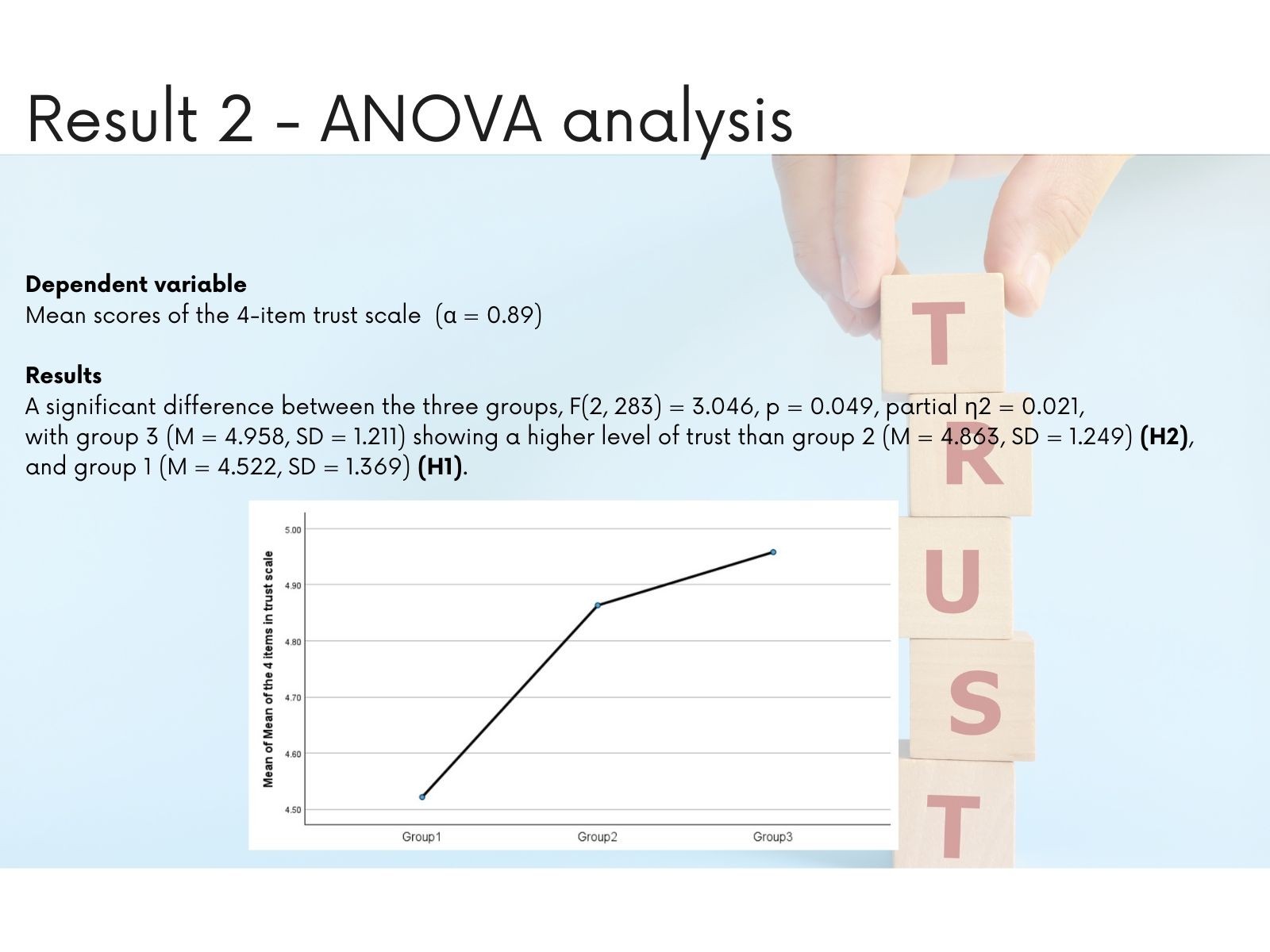

When people report sexual harassment or other harmful behavior on social media, they often feel ignored, frustrated, or emotionally drained. The process can feel cold and mechanical, just another form to fill out, when what they really need is empathy, clarity, and support.

This study explores whether giving reporting systems more human-like features, such as chatbots that feel interactive, personal, and empathetic, can make a difference. We asked 286 participants to imagine themselves as victims of online sexual harassment and to report the incident using one of three systems:

- A basic, machine-like interface with no personal touches
- A low human-like chatbot, with a few conversational elements
- A high human-like chatbot, designed to be more engaging and emotionally responsive

The findings were clear: both chatbot versions helped participants feel more comfortable, supported, and satisfied than the basic system. The more human-like the chatbot felt, the stronger those positive effects became: users felt more heard, more respected, and more willing to trust the platform.

This research highlights a key takeaway: designing reporting tools that feel more human isn't just a nice-to-have. It can make a real difference in how people experience and engage with safety systems online.

Category

May 15, 2024

UX Evaluation

Services

UX Research

Client

NETHATE

Year

2024
